Theme: Building Innovative 3D Interfaces with Magic Leap

We tend to design interfaces for flat screens. With Magic Leap you're free to build interface components in a 3D setting and interact with them visually (including eye tracking), haptically (through tactile vibrations), and aurally (spatialized sound). The Meetup took place at RLab on July 24th. Below is a recap of the event. Also visit our NY Magic Leap Meetup homepage.

Presentation Slides

Evolving Technologies Corporation - Grand Prize Winner in the Bose AR @ Company NYC Pitch Challenge

6/15/2019

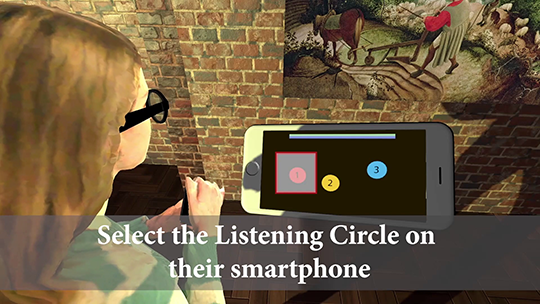

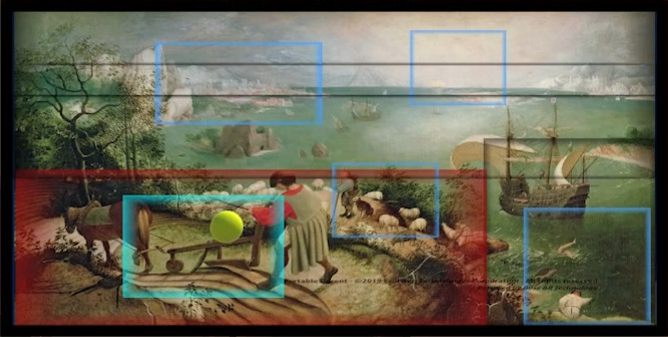

Recently, Bose (the same people known for their noise cancellation headphones) and Company.co (formerly Grand Central Tech) partnered to sponsor a competition for the most innovative and promising apps for the Bose AR Frames. We are pleased to announce that we are a grand prize winner in this competition.

Josh Pratt and I developed Portable Docent, a Unity app that combines spatial awareness and dynamic audio content to create an immersive and responsive audio experience for museum visitors. It is built using Bose AR technology. Through some fancy algorithms the app accurately tracks the portions of a painting a museum visitor is gazing at, and dynamically orchestrates ambient soundscapes and voice overs to create a rich immersive audio experience.

About the AR Frames

Unlike most augmented reality devices, the Bose AR Frames omit any augmented vision features. So, what in the world makes these glasses special? For one thing, they tag team with your (Android or iOS) phone. Having the phone in constant contact with the Frames opens the door to numerous kinds of uses. The Frames have speakers that project high quality sound into your ears. There's a built-in microphone. You can make and receive calls through the Frames. They respond to gestures like double-tapping on the side of the frame, nodding your head for Yes, and shaking your head from side to side. Aside from a long lasting battery, the Frames have accelerometers, gyroscopes, and a magnetic compass. The Frames easily fit over regular eyeglasses. They are designed for long lasting comfort.

Oh, just one more thing ... Bose has wisely elected to incorporate the same kind of hardware sensors in the QuietComfort 35 II (QC35 II) noise cancelling headphones as well as every new headphone, like the new Bose 700. The implications of this are enormous, as there already are millions of AR capable headphones out there. And what is the cost to the Bose customer who already has an AR capable headphone? Zippo. Nada. Nothing. This translates to easy adoption and use.

How the App Started

The requirements for our app, Portable Docent, are relatively straightforward in design, but not so simple from an implementation standpoint. The app has to make it easy for someone who has the Bose Frames to walk up to a museum painting, look at different parts of the painting, and be immersed in ambient/atmospheric soundscapes as well as listen to voice overs based on the portions of the painting they are gazing at. To make this work seamlessly requires a bit of ingenuity.

The Bose Frames can accurately track orientation, so they know how far you are looking up or down, or turning left or right. They don't know your physical location. We approached this in a number of ways, including Bluetooth beacons and the Kinect. The challenge with these is that the setup is far too complicated. And with the Kinect there are limitations on the number of people that can be tracked simultaneously, occlusion issues, and more. We opted instead for a simpler approach that involves identifying a few reference points, and from there everything is automatically triangulated. No fuss. No muss. You just put on the glasses and enjoy.

An early rig for projecting paintings of various sizes

Calibration

We developed two methods of calibration. One entails a set of geometrical computations and measurements so that a museum visitor can enter their approximate height when first starting the Portable Docent app, step inside a "Listening Circle" on the floor, tap to select the Listening Circle they are in, and immediately begin the audio enhanced painting experience. Our second calibration method, Quick Mode, literally takes under 10 seconds to calibrate to any painting from any viewing location.

Audio Environment

We have two kinds of regions: voice overs and ambient soundscapes. These regions can be positioned and sized to create the desired audio experience.
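To make the geometry a little more concrete, here is a minimal Python sketch of how a head orientation could be turned into a point on a painting and then matched against audio regions. It is not the Portable Docent implementation (the app itself is built in Unity, and its algorithms are not described here). It assumes the visitor's position is already known, for example from a Listening Circle and an entered height, and every name in it (`Region`, `gaze_point_on_wall`, `active_regions`, the coordinate conventions) is made up for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A rectangular audio region on the wall, in meters (hypothetical layout)."""
    name: str
    kind: str  # "voice_over" or "ambient"
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def gaze_point_on_wall(viewer_x, eye_height, distance, yaw_deg, pitch_deg):
    """Intersect the gaze ray with the wall plane.

    Assumed conventions: the viewer stands `distance` meters in front of the
    wall, yaw 0 / pitch 0 means looking straight at it, positive yaw turns
    right and positive pitch looks up. Returns (x, y) on the wall, or None
    when the viewer is not facing the wall.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    forward = math.cos(yaw) * math.cos(pitch)  # component of gaze toward the wall
    if forward <= 1e-6:
        return None
    x = viewer_x + distance * math.tan(yaw)
    y = eye_height + distance * math.tan(pitch) / math.cos(yaw)
    return (x, y)


def active_regions(regions, point):
    """Names of all regions containing the gaze point (regions may overlap)."""
    if point is None:
        return []
    x, y = point
    return [r.name for r in regions if r.contains(x, y)]
```

With regions allowed to overlap, an ambient soundscape can cover a large area while a smaller voice over region sits inside it, so both are reported when the gaze lands on the inner one.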
Aural information is a natural part of our environment, but audio information delivered on a device is usually separate from what we see. Why not let the audio curate the visual? It's exciting to see where this technology is heading. Clearly, there are some areas where we're breaking new ground.

In recent months I've been doing a lot with drones. I have my FAA (Part 107) Drone Pilot License and am creating software tools for aerial photogrammetry. At some point I'll post an entry about my serious work with drones, but in this post I want to write about something much more fun: controlling an indoor drone with Magic Leap purely through hand gestures.

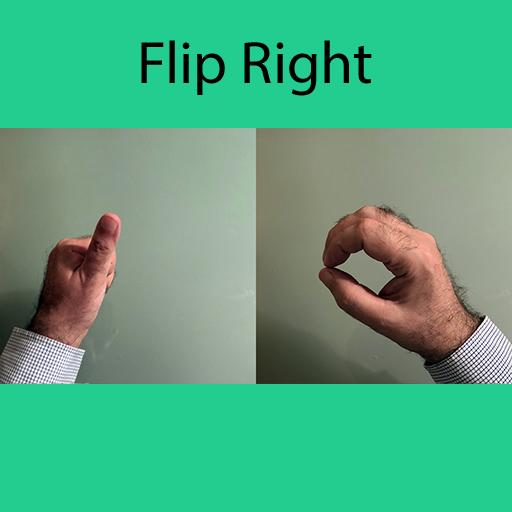

A couple of months ago there was a hackathon (the Unity/NYC XR Jam, for which my entry was awarded the best AR application) that took place at R-Lab in the Brooklyn Navy Yard. During that weekend I built a Magic Leap application with Unity to pilot a drone purely through 14 hand gestures like the one shown here:

The drone used is an educational drone primarily designed for indoor use that is known as the Tello Drone (manufactured by Ryze).

The Tello costs about $100. The drone is remarkable for its price. If you want to start playing with drones for recreation this would be a great first experience. Most significantly from my standpoint, it has a well defined SDK.
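For a feel of what that SDK looks like, here is a small Python sketch. The Tello SDK accepts plain text commands (such as `command`, `takeoff`, `land`, `forward 50`, `cw 90`) sent over UDP to the drone at 192.168.10.1, port 8889. The gesture names and the gesture-to-command mapping below are purely hypothetical and are not the mapping used in the hackathon app, which was written in Unity for the Magic Leap.

```python
import socket

TELLO_ADDRESS = ("192.168.10.1", 8889)  # default drone address in the Tello SDK

# Hypothetical mapping from recognized gesture names to Tello text commands.
# Distances are in centimeters, rotations in degrees.
GESTURE_COMMANDS = {
    "thumbs_up": "takeoff",
    "fist": "land",
    "finger": "forward 50",
    "open_hand_back": "back 50",
    "pinch": "up 30",
    "l_shape": "ccw 90",
    "c_shape": "cw 90",
    "ok": "flip f",
}


def command_for(gesture):
    """Return the Tello command for a gesture, or None if it is unmapped."""
    return GESTURE_COMMANDS.get(gesture)


def udp_sender(sock, address=TELLO_ADDRESS):
    """Wrap a UDP socket in a function that sends one text command."""
    def send(command):
        sock.sendto(command.encode("ascii"), address)
    return send


def fly(gestures, send):
    """Enter SDK mode, then send the command for each recognized gesture.

    `send` is any callable taking a command string. A real controller would
    also wait for the drone's reply after each command; that is omitted here.
    """
    sent = ["command"]  # "command" switches the drone into SDK mode
    send("command")
    for gesture in gestures:
        command = command_for(gesture)
        if command is None:
            continue  # ignore gestures with no assigned action
        send(command)
        sent.append(command)
    return sent
```

To talk to a real drone you would create a socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and pass `udp_sender(sock)` as `send`. Passing the sender in as a function keeps the mapping logic easy to try out without a drone nearby.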

In any case, here is a video showing the drone in action purely managed through hand gestures.

It is not every day that you get to take something exciting like the Magic Leap One and creatively use it for fundamental social needs that are all too often relegated to an afterthought.

We are developing an assistive indoor visual navigator to aid persons with blindness/low vision. The nature of the Magic Leap One coupled with our application could be a game changer for the large number of veterans in rehab, the scores of corporations seeking workplace ADA Compliance, and for young adults having to build up their Orientation & Mobility skills. The Magic Leap device is uniquely suited for this application as it is a fully self-contained general purpose computing/sensory platform. Our working MVP, the Assistive Vision Navigator, exploits depth mapping, audio, haptic feedback and instant calculations so a person with blindness can simply ask, for example, "How far is the wall?" and get an instant spoken answer. The video demo below shows an early working version.
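As a rough illustration of the last step only (turning depth readings into a sentence to speak), here is a short Python sketch. It is not taken from the Assistive Vision Navigator. The function name, the idea of sampling several depth readings around the direction the user is facing, and the wording of the answer are all assumptions made for this example.

```python
import statistics


def distance_answer(depth_samples_m, target="wall"):
    """Build a spoken-style answer from depth samples given in meters.

    Missing (None) or non-positive readings are dropped, and the median of
    the rest is used so that a single stray reading does not skew the answer.
    """
    valid = [d for d in depth_samples_m if d is not None and d > 0]
    if not valid:
        return f"I can't find the {target}."
    distance = statistics.median(valid)
    feet = distance * 3.28084  # meters to feet
    return f"The {target} is about {distance:.1f} meters, or {feet:.0f} feet, away."
```

The resulting string would then be handed to whatever text-to-speech facility the platform provides.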

For those interested, we will be presenting at the upcoming CSUN Assistive Technology Conference in California on March 14, 2019.

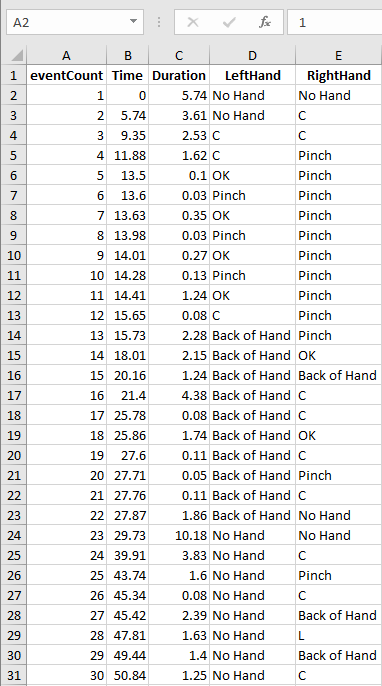

Further information can be found at:

https://www.csun.edu/cod/conference/2019/sessions/index.php/public/presentations/view/1309

https://www.csun.edu/cod/conference/2019/sessions/index.php/public/conf_sessions

You can also contact us in the form below:

Magic Leap has been in the press for a number of years now. They got investors to part with a lot of $$$ and pour it into a startup for a completely new kind of technology. There had to be something really compelling for this to happen. Even as the Magic Leap One has just been released into the wild, the company has made clear that this is just an interim product intended for developers, early adopters, and enthusiasts. So why not wait for the product to sport improved features like a wider field of view, offer a wealth of apps, and of course, become more affordable?

I will answer it in two short words: Spatial Computing.

What Magic Leap has done is to rethink computing so that the immersive environment is really as much an operating system as it is a platform for running apps. They took to heart the notion of seamlessly melding virtually created content with the real world so that the two are not perceived separately. In that sense, the term MR for Mixed Reality (or as I sometimes think, "Melded" Reality) is more appropriate for the Magic Leap than AR or XR.

Instead of extolling the virtues or ranting about the product or technology, I'd like to delve into some of the ideas behind Magic Leap, why you should take interest in it, and where it may go.

This is not your father's VR gear

At first glance you may think Magic Leap is out to make a fashion statement. Perhaps it is, but the statement has to do with functional design. To begin with, you'll notice the visor is positioned above and not over the ears. There are several reasons for this.
Magic Leap incorporates built-in headphones that can take advantage of Resonance Audio. Should you prefer to use your own headphones or earbuds, you can easily do so, as the Lightpack allows you to connect a standard 3.5mm jack. There is another not so obvious benefit to having the visor extend above the ears. It permits you to comfortably blend the ambient sounds in your environment with the audio output from the Magic Leap device. In VR you want to shut out the world, but in AR having the ambient sounds as they naturally occur is a plus.

Input, Sensors and Navigation

It is fascinating how often we draw on nature to inspire technology. Perhaps Magic Leap wasn't influenced by jumping spiders, but you have to admit that there's an uncanny similarity in the way biological and electronic sensors are assembled.

Mapping your physical space

First came the Tango tablet, then the HoloLens and now Magic Leap. What characterizes these devices is that they know where they are in physical space. What's more, they can handle occlusion mapping dynamically. The video below shows the Magic Leap building a geometric mesh as you walk around. The color bands depict the current distance from your view, and therefore the color of the mesh pattern can change as you move closer to or farther away from the generated mesh.

Mapping Hand Gestures

One of the features of the Magic Leap One is the ability to track hand gestures, which includes a single finger, making a fist, pinching thumb and forefinger, a thumbs up, using the forefinger and thumb to form an "L", the back of an open hand, an "OK" gesture, and forming a "C" shape. The video below shows this in action. Notice that the Magic Leap easily distinguishes between right and left hand, and can simultaneously track both hands. In the video you will see various hovering colorized balls. These indicate the relative positional mapping of the hand, fingers and joints.
As the captured video is not 3D (default video capture is from the left eye), the placement of the colorized markers doesn't quite match what you would see while wearing the Magic Leap.

All these features are easily programmable, as the Magic Leap SDK provides a fairly rich and easily accessible API (Application Programming Interface). This API works with Unity, Unreal, and Helio, the Magic Leap web browser. I will outline this in a future blog post. For the moment, I want to stay with the theme of Magic Leap sensory input features.

Another important aspect of Magic Leap is the ability to capture data and perform analytics on it. The Magic Leap API exposes internal sensor data sufficiently so I can write a program to produce a log file like the kind shown below, which is from one of the sessions involving hand gesture recognition.

Eye of the Gazer

One of the modalities of user input is the ability to actively perform eye tracking. I am not talking about head pose, but rather what you are actually looking at as your eyes roam your field of view. The video below shows this in action. In this rudimentary example I thought it would be useful to show basic eye gaze activity in action. Unconscious eye gaze can influence behavior in the rendered scene. As the user glances at the cube on the right it turns red and rotates, and while the user gazes at the sphere it turns green.

These are simple static objects. We could also affect the animated behavior of avatars. We could influence the plot line in an interactive story. We could create new kinds of user interfaces. We could create training software for special needs such as for recovering stroke patients. We could combine eye gaze with voice input to do something like "Make that one red" where 'that one' is the object we are looking at.

More to follow

There's lots more I have to say about Magic Leap, and it will be doled out in future installments.
In the meantime, we have an active meetup group (https://www.meetup.com/NY-Magic-Leap-Meetup) that you are welcome to join.
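For readers curious how the "Make that one red" idea from the eye gaze section could be wired up, here is a minimal Python sketch of the underlying geometry. It does not use the Magic Leap SDK. It assumes you already have a gaze origin and direction from the eye tracker, approximates each object by a bounding sphere, and picks the nearest one the gaze ray passes through. All names (`SceneObject`, `gazed_object`, `make_that_one`) are invented for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class SceneObject:
    """An object approximated by a bounding sphere (hypothetical scene model)."""
    name: str
    center: tuple
    radius: float
    color: str = "white"


def gazed_object(origin, direction, objects):
    """Return the nearest object whose bounding sphere the gaze ray hits."""
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0:
        return None
    unit = tuple(c / length for c in direction)
    best, best_t = None, math.inf
    for obj in objects:
        to_center = tuple(c - o for c, o in zip(obj.center, origin))
        # Distance along the ray to the point of closest approach.
        t = sum(a * b for a, b in zip(to_center, unit))
        if t < 0:
            continue  # object is behind the viewer
        # Squared distance from the sphere center to the ray.
        miss_sq = sum(a * a for a in to_center) - t * t
        if miss_sq <= obj.radius ** 2 and t < best_t:
            best, best_t = obj, t
    return best


def make_that_one(color, origin, direction, objects):
    """Apply a color to whatever the user is looking at, if anything."""
    target = gazed_object(origin, direction, objects)
    if target is not None:
        target.color = color
    return target
```

A voice command handler would call `make_that_one("red", ...)` with the current gaze ray at the moment the phrase is recognized.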

In this demo I show two sets of objects: an animated gearbox and an engine which can be viewed in X-Ray mode. In both sets of objects I can cycle through selecting individual parts by tapping on the buttons located near the bottom left corner of the iPad.

Notice that as each item is selected, the corresponding text label appears in the panel. We love to experiment and push the envelope with new technologies. ARKit is proving to be an exciting development in the world of AR and VR.

We built an AR application in Unity where we overlay a 3D map of midtown Manhattan. Notice how smooth the positional tracking is with the iPad.

On June 19th-20th Chris and I had the pleasure of poring over the ins and outs of HoloLens technology at the Microsoft HoloHack-NYC.

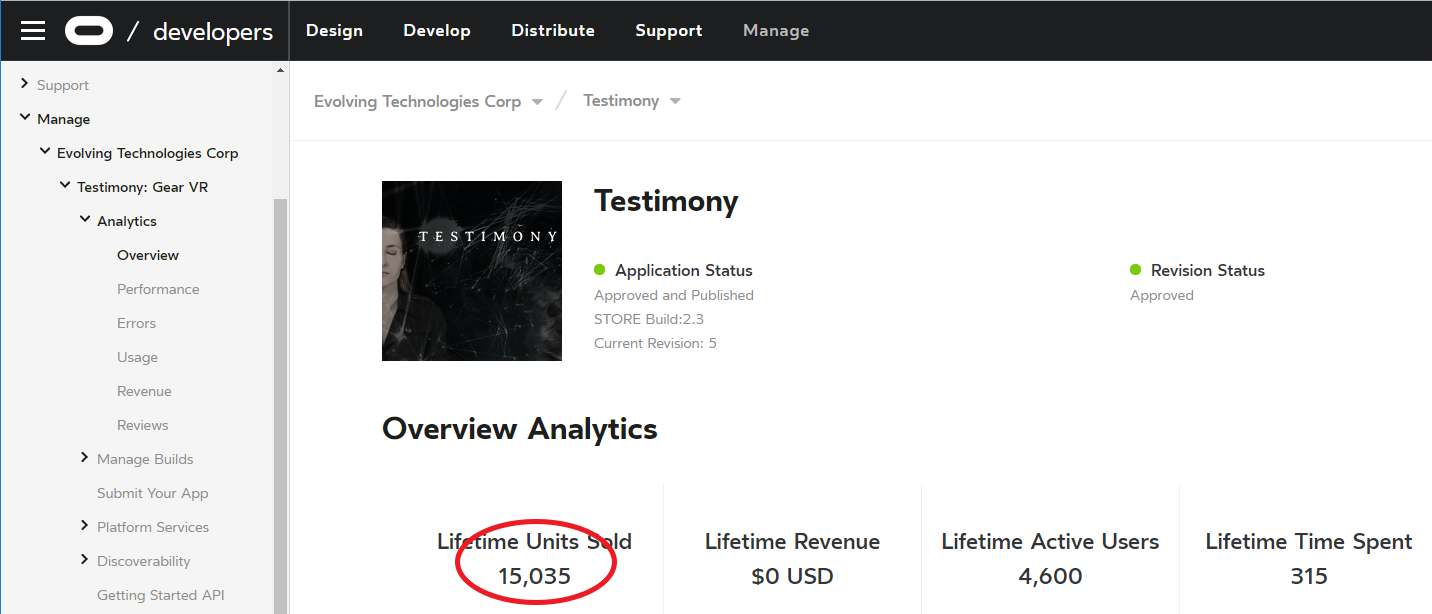

We wanted to make a project that took advantage of as many features of the HoloLens as possible. However, the most interesting one for us was the spatial mapping. In Augmented Reality you want people to explore the space around them and have the application change based on their location. We decided to build a fire fighter simulator. We used the spatial processing to discover all the flat surfaces in the area you are in and virtually set them on fire. Each of those fires also had spatial audio attached to help you find it in the space. Voice commands were used for activating the fire hose based on the keyword "fire", which is where the name comes from. The user ends up walking around the space, screaming the word fire over and over to put out the fires around them. Those who were too shy to scream fire could also air tap on the fires to put them out, taking advantage of the gesture recognition.

Here is a sample video capture of the application:

Those of you who would like to play around with the application can go to our GitHub site. At the end of the hackathon we had a fun time demoing the App for a bunch of students from Riverdale.

Since last summer we have been collaborating with storyteller Zohar Kfir on building VR experiences. Our collaboration eventually led us to do a VR project sponsored by Oculus Studios and produced by Kaleidoscope VR, called Testimony. Testimony is an interactive documentary for virtual reality that shares the stories of five survivors of sexual assault and their journey to healing. Testimony is an advocacy platform to allow the public to bear witness to those who have been silenced. The world premiere was at Tribeca Film Festival in April. We published "Testimony" on the Oculus Store for both Gear VR and Rift on June 1st. As of the date of this post (6/13/2017) it has already topped 15,000 downloads.
The Made in NY Media Center wrote a nice blog post about this project: nymediacenter.com/2017/06/loren-and-chris-developing-virtual-reality-for-social-impact/

We recently built a virtual tour experience of Markthal Rotterdam, a recipient of the 2017 VIVA International Awards for the most cutting-edge design, housing residential apartments with one of the Netherlands' largest food markets. The VR experience was exhibited at RECon 2017 at the Las Vegas Convention Center. A number of visitors to the VR Zone inquired about how we put together the Markthal VR application. We'd like to give you a sneak peek under the hood.

Markthal VR

Our process for creating virtual tours entails four steps:

For clients new to VR and AR experiences we first like to show what can be expected in a finished product. Typically this involves demonstrating our previous work on similar applications. Then we work with the client to outline the key elements for their custom application. Clarity in the VR experience and the kind of user interaction/user experience is what makes it possible to deliver a solid and complete application on time and on budget. For this application we wanted to create an authentic experience of what it's like to visit Markthal Rotterdam and explore its many facets. The concourse alone is over 100,000 sq ft. To start this project we set out a few guidelines to direct our development. We combine client feedback with our own experience creating VR experiences. For Markthal we compiled a short list of design constraints:

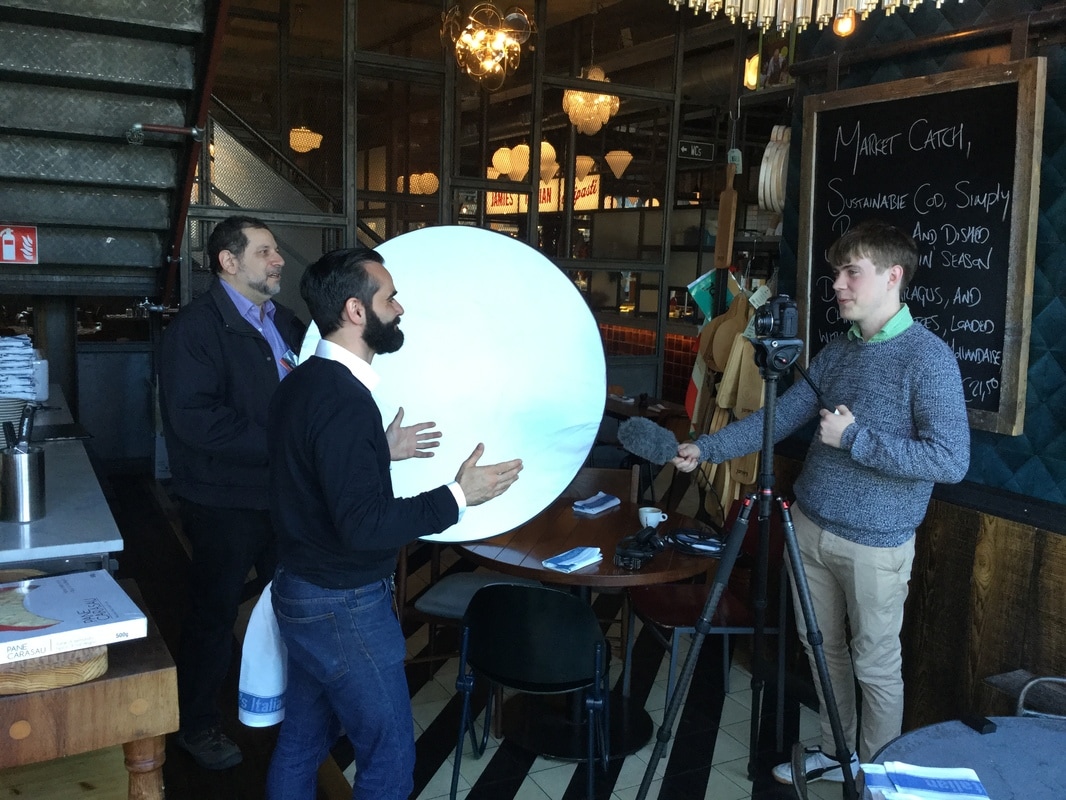

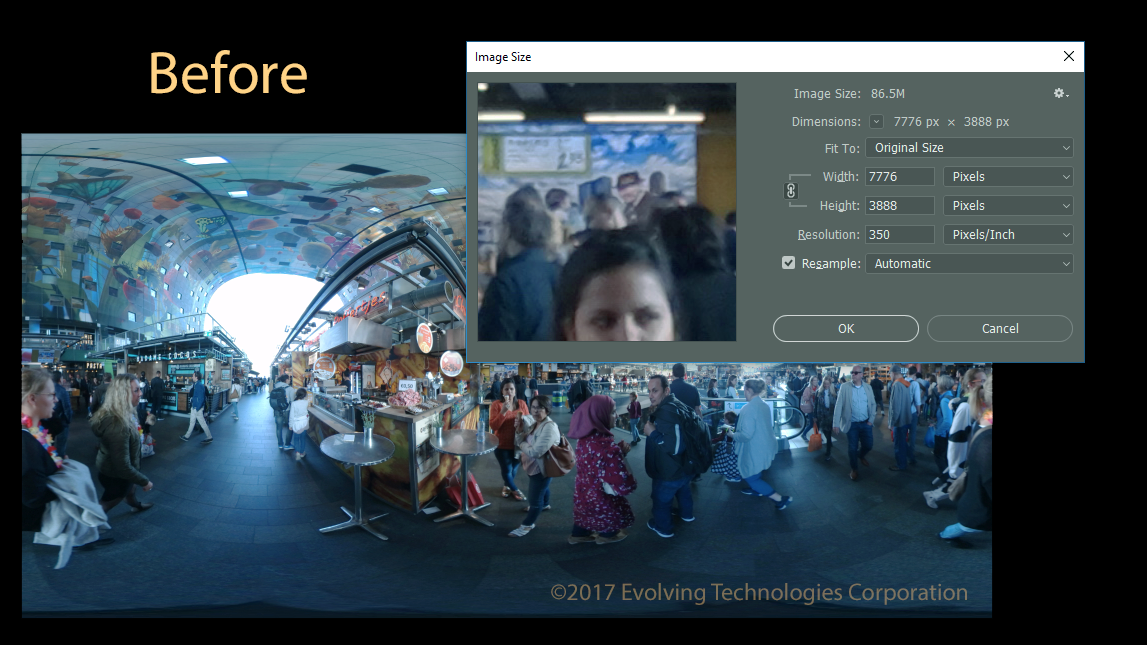

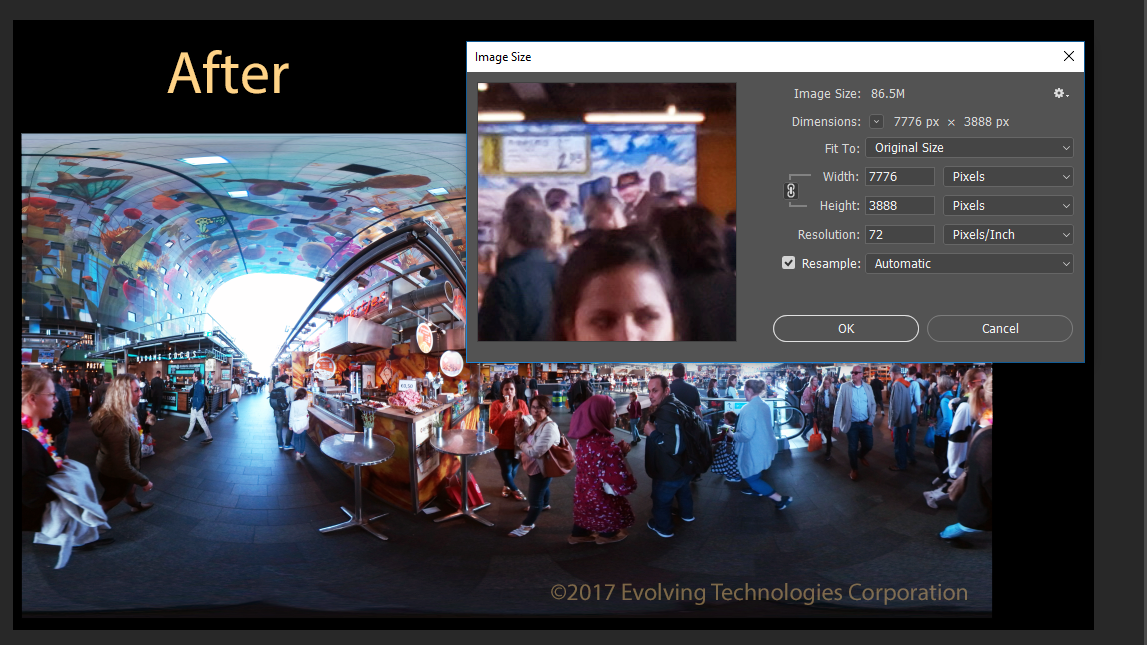

Ease of use was most important. To simplify control in the VR space we decided that all user interactions would be done through either gaze or a single tap. To provide feedback to the user that the gaze activation is working, each object that can be looked at always reacts to your gaze.

To better capture the experience of wandering through Markthal we built a simplified model of the space. We combined architectural drawings and observations of the space to map out all of the locations for the experience. Movement through the virtual space is most natural by jumping from one location to the next by line of sight. This avoids visual clutter and encourages the user to explore.

Considering the volume of people at RECon 2017 we also didn’t want people staying in the VR experience for too long, creating lines. We designed the App so that it could be easily reset, instantly ready for the next person. To gracefully end the experience for users that have spent too much time, we built in a 10 minute time out.

Ahead of the shooting we scout out the location so that we can reconstruct in VR all the images, videos, sound recordings and other features. We arrived with a film crew in Rotterdam for video interviews of the property developer and shop owners.

After three and a half days in Rotterdam we're on the plane headed back to New York. We now have 8 days to turn all the captured footage and recordings into our VR experience. We captured approximately one hundred and seventy 360 images. Every image file was curated for color balance, brightness, and contrast. Here is a sample of the Before & After.

With each image we also captured ambient audio for that location. The audio was mapped to a location on a virtual model of Markthal, resulting in full spatial audio as you explore the space.

To navigate around the space we let you teleport from one location to the next. To allow that we use a gaze activated icon.
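The gaze activation and the 10 minute time out can be sketched in a few lines of Python. This is an illustration rather than the code in the Markthal app. In particular, the post does not say how a gaze triggers an icon, so the dwell time used here (look at an icon for a moment to activate it) and the names `GazeButton` and `session_expired` are assumptions.

```python
from dataclasses import dataclass

SESSION_LIMIT_SECONDS = 10 * 60  # the 10 minute time out mentioned above


@dataclass
class GazeButton:
    """A gaze activated icon that fires after being looked at long enough."""
    dwell_seconds: float = 1.5  # hypothetical dwell time
    progress: float = 0.0       # seconds of continuous gaze so far

    def update(self, is_gazed, dt):
        """Advance by dt seconds; return True on the frame the icon activates."""
        if not is_gazed:
            self.progress = 0.0  # looking away resets the timer
            return False
        self.progress += dt
        if self.progress >= self.dwell_seconds:
            self.progress = 0.0  # re-arm for the next activation
            return True
        return False


def session_expired(elapsed_seconds, limit=SESSION_LIMIT_SECONDS):
    """True once the visitor has used up the allotted session time."""
    return elapsed_seconds >= limit
```

The `progress` value doubles as the visual feedback signal: an icon can grow, fill, or glow in proportion to `progress / dwell_seconds`, so the user can see that their gaze is registering before anything is triggered.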
The icons are placed in the space giving depth, so farther icons appear smaller and closer icons are bigger. We use XpressVR, a custom tool designed in house, to help us rapidly build the environment and place all of the assets into the space.

To keep users from ever being lost and give them quick access to new locations we made it easy to access the main menu. With a single tap the user can jump back to the main menu and explore a new location.

Perhaps the most fun part of building the application was creating the arch with the magnificent ceiling art.

Lastly, it comes time to press a magic button that deploys the App onto the VR device (in this case, the Samsung Gear VR).

We are always looking for new projects and interesting ideas. If you have an idea for a VR application for your industry or would just like to chat about the possibilities of using VR in your industry, reach out to us.

Author: Loren Abdulezer


Copyright © 2022 Evolving Technologies Corporation
